Today in Character Technology - 5.16.25

Long-term Dyadic Human Motion Synthesis, Stylized Gaussian Blendshapes, VR-sketches to 3D Garments, and 4D Motion Tokenization

Happy Friday!

First up today: a paper from my former colleagues at Sony AI. Dyadic Mamba: Long-term Dyadic Human Motion Synthesis focuses on synthesizing animation of two people interacting, driven by text descriptions of the action. The authors mention a supplemental video; however, it does not appear to have been released anywhere yet. The paper will be presented at CVPR 2025.

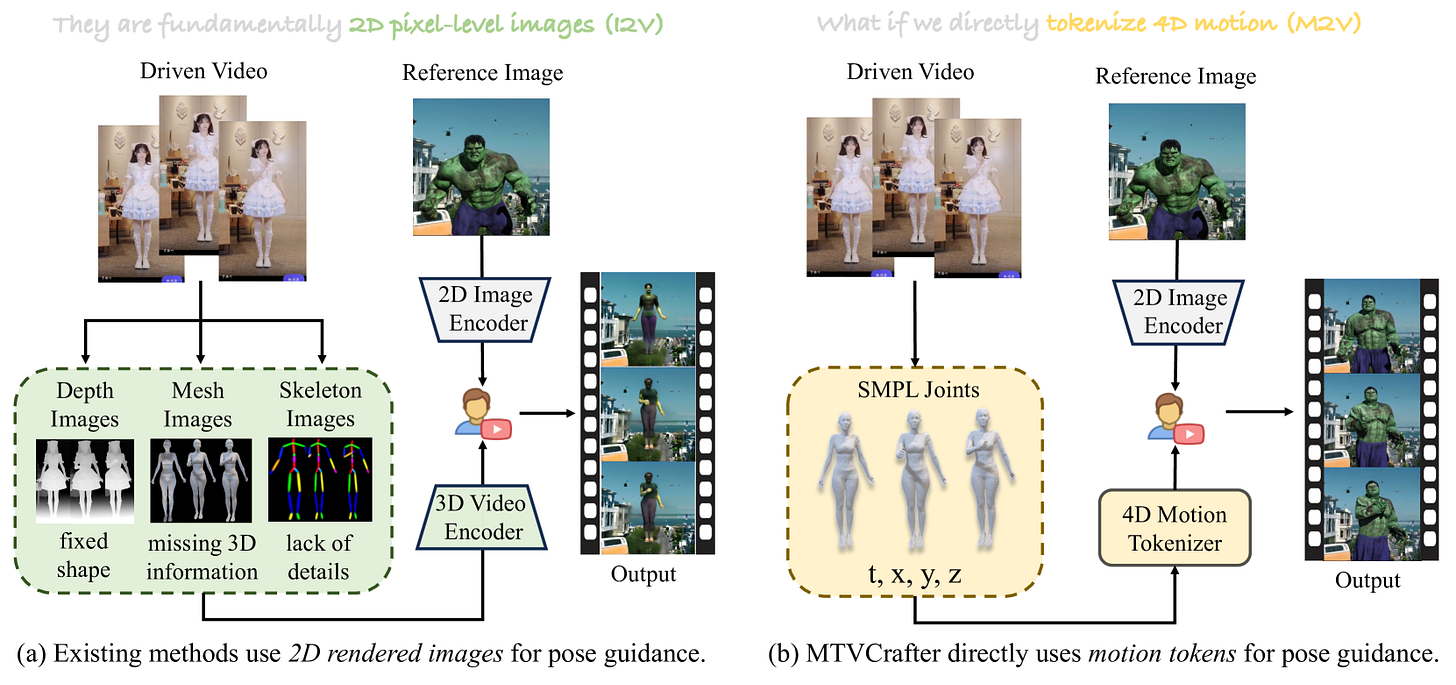

In a similar category is MTVCrafter: 4D Motion Tokenization for Open-World Human Image Animation. As you likely know, many of the papers that take an input video and use it to drive a 2D avatar have a 2D or 3D skeleton in the mix, often extracted with existing models like OpenPose or with custom ones. This paper takes an approach that works for both 2D and 3D applications.

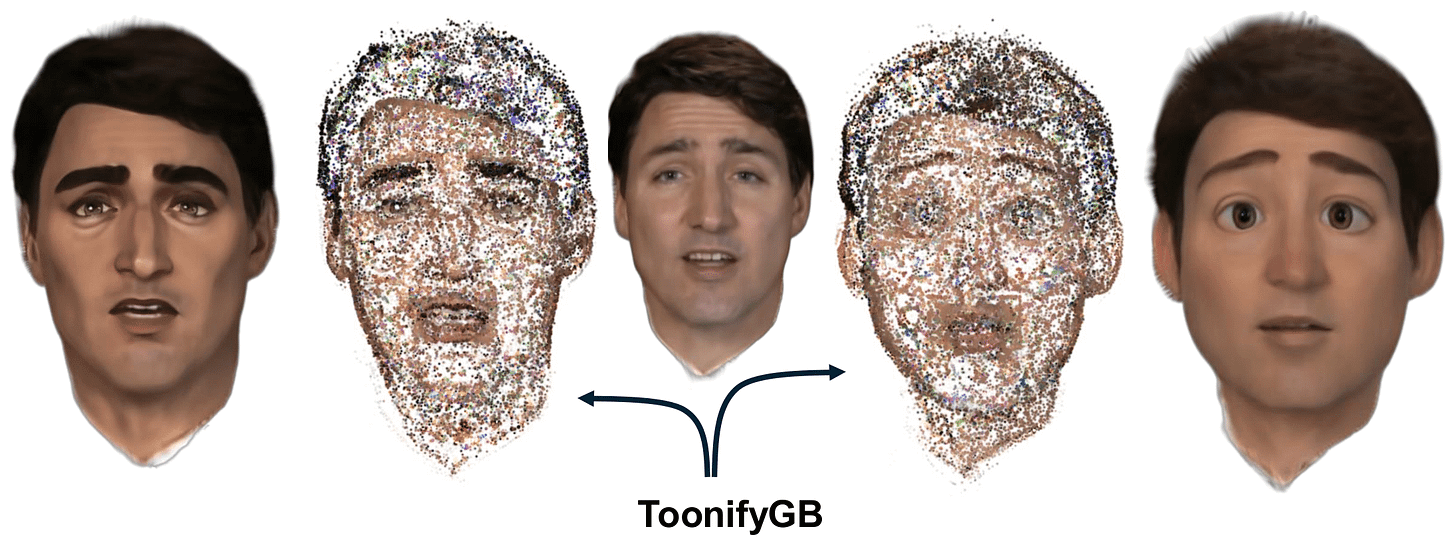

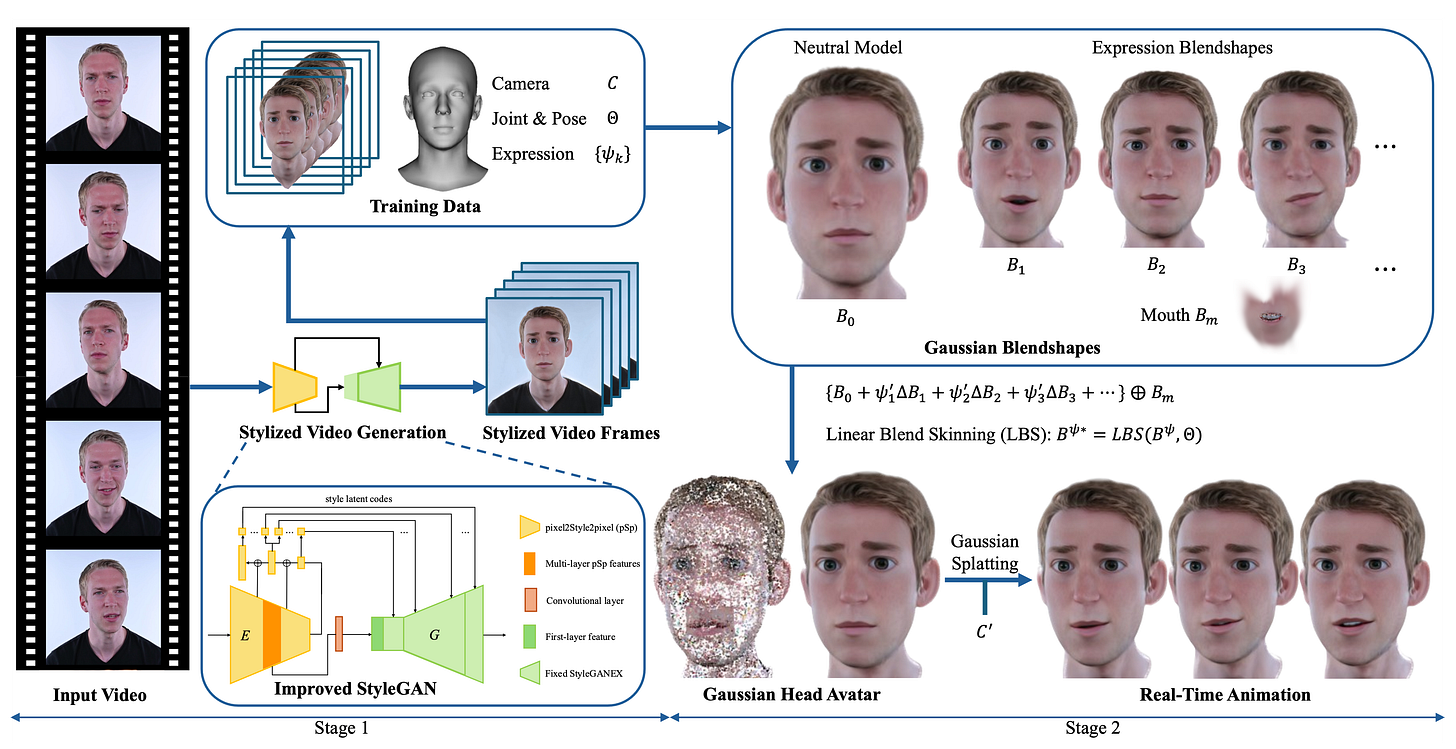

Then, something for those more interested in character creation than animation: ToonifyGB extends 2020’s Toonify to blendshape-driven 3D Gaussian avatars.

Lastly, for those in the AR/VR character space: generating 3D garments from 3D line drawings sketched in VR.

Cheers

Dyadic Mamba: Long-term Dyadic Human Motion Synthesis

Julian Tanke, Takashi Shibuya, Kengo Uchida, Koichi Saito, Yuki Mitsufuji

Sony AI

Sony Group Corporation

🚧 Project: N/A

📄 Paper: https://arxiv.org/pdf/2505.09827

💻 Code: N/A

❌ ArXiv: https://arxiv.org/abs/2505.09827

Generating realistic dyadic human motion from text descriptions presents significant challenges, particularly for extended interactions that exceed typical training sequence lengths. While recent transformer-based approaches have shown promising results for short-term dyadic motion synthesis, they struggle with longer sequences due to inherent limitations in positional encoding schemes. In this paper, we introduce Dyadic Mamba, a novel approach that leverages State-Space Models (SSMs) to generate high-quality dyadic human motion of arbitrary length. Our method employs a simple yet effective architecture that facilitates information flow between individual motion sequences through concatenation, eliminating the need for complex cross-attention mechanisms. We demonstrate that Dyadic Mamba achieves competitive performance on standard short-term benchmarks while significantly outperforming transformer-based approaches on longer sequences. Additionally, we propose a new benchmark for evaluating long-term motion synthesis quality, providing a standardized framework for future research. Our results demonstrate that SSM-based architectures offer a promising direction for addressing the challenging task of long-term dyadic human motion synthesis from text descriptions.
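
To make the "concatenation instead of cross-attention" idea concrete, here is a minimal sketch. It assumes each person's motion is a list of per-frame feature vectors and that the two people are joined along the feature axis (the paper's exact layout is an assumption here); `concat_dyad` and `ssm_scan` are hypothetical names. The scan is a plain linear state-space recurrence with a diagonal transition and dense input/output projections, so it deliberately omits Mamba's input-dependent (selective) parameters. What it does show is that the state has a fixed size per step and no positional encoding is involved, which is why sequence length is not capped by a training-time encoding range.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def concat_dyad(frames_a: Sequence[Sequence[float]],
                frames_b: Sequence[Sequence[float]]) -> Matrix:
    """Join both people's features frame by frame (assumed feature-axis layout)."""
    if len(frames_a) != len(frames_b):
        raise ValueError("both people need the same number of frames")
    return [list(fa) + list(fb) for fa, fb in zip(frames_a, frames_b)]


def ssm_scan(xs: Sequence[Sequence[float]], a: Sequence[float],
             B: Sequence[Sequence[float]], C: Sequence[Sequence[float]]) -> Matrix:
    """Linear time-invariant SSM with a diagonal state transition.

    h_t = a * h_{t-1} + B x_t   (a applied elementwise)
    y_t = C h_t
    """
    n = len(a)
    h = [0.0] * n
    ys: Matrix = []
    for x in xs:
        # Dense input projection: every state channel sees both people's features.
        u = [sum(B[i][j] * x[j] for j in range(len(x))) for i in range(n)]
        # Fixed-size recurrent update; no positional encoding is needed.
        h = [a[i] * h[i] + u[i] for i in range(n)]
        ys.append([sum(row[i] * h[i] for i in range(n)) for row in C])
    return ys


# Hypothetical data: person A moves at frame 0, person B stays still.
xs = concat_dyad([[1.0], [0.0], [0.0]], [[0.0], [0.0], [0.0]])
print(ssm_scan(xs, a=[0.5], B=[[1.0, 1.0]], C=[[1.0]]))  # [[1.0], [0.5], [0.25]]
```

The impulse from person A at frame 0 persists in the shared state and decays by `a` each frame, so every later output carries information about both people without any attention between the two streams.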

MTVCrafter: 4D Motion Tokenization for Open-World Human Image Animation

🚧 Project: https://anonymous.4open.science/r/MTVCrafter-1B13/README.md

📄 Paper: https://arxiv.org/pdf/2505.10238

💻 Code: https://anonymous.4open.science/r/MTVCrafter-1B13/README.md

❌ ArXiv: https://arxiv.org/abs/2505.10238

Human image animation has gained increasing attention and developed rapidly due to its broad applications in digital humans. However, existing methods rely largely on 2D-rendered pose images for motion guidance, which limits generalization and discards essential 3D information for open-world animation. To tackle this problem, we propose MTVCrafter (Motion Tokenization Video Crafter), the first framework that directly models raw 3D motion sequences (i.e., 4D motion) for human image animation. Specifically, we introduce 4DMoT (4D motion tokenizer) to quantize 3D motion sequences into 4D motion tokens. Compared to 2D-rendered pose images, 4D motion tokens offer more robust spatio-temporal cues and avoid strict pixel-level alignment between pose image and character, enabling more flexible and disentangled control. Then, we introduce MV-DiT (Motion-aware Video DiT). By designing unique motion attention with 4D positional encodings, MV-DiT can effectively leverage motion tokens as 4D compact yet expressive context for human image animation in the complex 3D world. Hence, it marks a significant step forward in this field and opens a new direction for pose-guided human video generation. Experiments show that our MTVCrafter achieves state-of-the-art results with an FID-VID of 6.98, surpassing the second-best by 65%. Powered by robust motion tokens, MTVCrafter also generalizes well to diverse open-world characters (single/multiple, full/half-body) across various styles and scenarios.
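
The core operation named in the abstract, quantizing continuous 3D motion into discrete tokens, is vector quantization. A minimal sketch, assuming a fixed, already-learned codebook and one flattened vector of 3D joint positions per frame; `flatten_frame`, `quantize`, the codebook, and the motion data are all hypothetical, and 4DMoT's actual encoder, token layout, and codebook training are not reproduced here.

```python
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def flatten_frame(joints: Sequence[Sequence[float]]) -> List[float]:
    """Turn one frame of (x, y, z) joint positions into a flat feature vector."""
    return [coord for joint in joints for coord in joint]


def squared_distance(u: Vec, v: Vec) -> float:
    return sum((p - q) ** 2 for p, q in zip(u, v))


def quantize(features: Sequence[Vec],
             codebook: Sequence[Vec]) -> Tuple[List[int], List[List[float]]]:
    """Nearest-neighbour vector quantization.

    Returns the discrete token id for each feature vector and the codebook
    vectors that stand in for them (ties go to the lowest index).
    """
    tokens: List[int] = []
    recon: List[List[float]] = []
    for f in features:
        idx = min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        tokens.append(idx)
        recon.append(list(codebook[idx]))
    return tokens, recon


# Hypothetical data: one joint per frame, drifting upward along y.
motion = [[[0.0, 0.1, 0.0]], [[0.0, 0.9, 0.1]], [[0.1, 2.2, 0.0]]]
codebook = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 2.0, 0.0]]
tokens, _ = quantize([flatten_frame(f) for f in motion], codebook)
print(tokens)  # [0, 1, 2]
```

Because the output is a short sequence of integer ids rather than a rendered pose image, a downstream video model can attend to it as context without pixel-level alignment to the character, which is the property the abstract emphasizes.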

ToonifyGB: StyleGAN-based Gaussian Blendshapes for 3D Stylized Head Avatars

Rui-Yang Ju, Sheng-Yen Huang, Yi-Ping Hung

National Taiwan University

Taipei City, Taiwan

🚧 Project: N/A

📄 Paper: https://arxiv.org/pdf/2505.10072

💻 Code: N/A

❌ ArXiv: https://arxiv.org/abs/2505.10072

The introduction of 3D Gaussian blendshapes has enabled the real-time reconstruction of animatable head avatars from monocular video. Toonify, a StyleGAN-based framework, has become widely used for facial image stylization. To extend Toonify for synthesizing diverse stylized 3D head avatars using Gaussian blendshapes, we propose an efficient two-stage framework, ToonifyGB. In Stage 1 (stylized video generation), we employ an improved StyleGAN to generate the stylized video from the input video frames, which addresses the limitation of cropping aligned faces at a fixed resolution as preprocessing for normal StyleGAN. This process provides a more stable video, which enables Gaussian blendshapes to better capture the high-frequency details of the video frames, and efficiently generate high-quality animation in the next stage. In Stage 2 (Gaussian blendshapes synthesis), we learn a stylized neutral head model and a set of expression blendshapes from the generated video. By combining the neutral head model with expression blendshapes, ToonifyGB can efficiently render stylized avatars with arbitrary expressions. We validate the effectiveness of ToonifyGB on the benchmark dataset using two styles: Arcane and Pixar.
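
Stage 2 renders an expression by combining the neutral head model with expression blendshapes. A minimal sketch of that linear combination, assuming each blendshape is stored as per-Gaussian offsets from the neutral model (a common convention, not confirmed by the abstract) and treating every Gaussian attribute as a plain vector; `blend` and the example offsets are hypothetical, and rotation handling and rasterization are left out.

```python
from typing import List, Sequence

Attrs = List[List[float]]  # one attribute vector per Gaussian


def blend(neutral: Sequence[Sequence[float]],
          deltas: Sequence[Sequence[Sequence[float]]],
          weights: Sequence[float]) -> Attrs:
    """Linear blendshape combination: G = G0 + sum_k w_k * dG_k."""
    if len(deltas) != len(weights):
        raise ValueError("need exactly one weight per blendshape")
    out = [list(g) for g in neutral]  # copy so the neutral model is untouched
    for delta, w in zip(deltas, weights):
        if len(delta) != len(out):
            raise ValueError("every blendshape must cover every Gaussian")
        for i, g in enumerate(delta):
            for j, value in enumerate(g):
                out[i][j] += w * value
    return out


neutral = [[0.0, 0.0, 0.0]]    # position of a single Gaussian
jaw_open = [[0.0, -1.0, 0.0]]  # hypothetical per-Gaussian offsets
smile = [[0.5, 0.0, 0.0]]
print(blend(neutral, [jaw_open, smile], [0.5, 1.0]))  # [[0.5, -0.5, 0.0]]
```

Since the combination is a weighted sum, changing expression only means changing the weight vector, which is what makes per-frame animation cheap once the neutral model and blendshapes have been learned.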

From Air to Wear: Personalized 3D Digital Fashion with AR/VR Immersive 3D Sketching

Ying Zang, Yuanqi Hu, Xinyu Chen, Yuxia Xu, Suhui Wang, Chunan Yu, Lanyun Zhu, Deyi Ji, Xin Xu, Tianrun Chen

🚧 Project: N/A

📄 Paper: https://arxiv.org/pdf/2505.09998

💻 Code: N/A

❌ ArXiv: https://arxiv.org/abs/2505.09998

In the era of immersive consumer electronics, such as AR/VR headsets and smart devices, people increasingly seek ways to express their identity through virtual fashion. However, existing 3D garment design tools remain inaccessible to everyday users due to steep technical barriers and limited data. In this work, we introduce a 3D sketch-driven 3D garment generation framework that empowers ordinary users—even those without design experience—to create high-quality digital clothing through simple 3D sketches in AR/VR environments. By combining a conditional diffusion model, a sketch encoder trained in a shared latent space, and an adaptive curriculum learning strategy, our system interprets imprecise, free-hand input and produces realistic, personalized garments. To address the scarcity of training data, we also introduce KO3DClothes, a new dataset of paired 3D garments and user-created sketches. Extensive experiments and user studies confirm that our method significantly outperforms existing baselines in both fidelity and usability, demonstrating its promise for democratized fashion design on next-generation consumer platforms.
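
The abstract names a conditional diffusion model without further detail. As background, here is a minimal sketch of the standard DDPM forward (noising) process that such models are trained against, assuming the common linear beta schedule from 1e-4 to 0.02 over 1000 steps. These values, the function names, and the "garment latent" are illustrative assumptions, not the paper's settings; the 3D-sketch condition would enter through the denoising network, which is omitted.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linearly spaced beta schedule."""
    alpha_bars: List[float] = []
    prod = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0: Sequence[float], t: int, alpha_bars: Sequence[float],
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Sample x_t ~ N(sqrt(ab_t) * x0, (1 - ab_t) * I) and return (x_t, noise).

    A conditional denoiser eps(x_t, t, sketch_embedding) would be trained to
    predict `noise`; that network is not shown here.
    """
    ab = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, noise)]
    return xt, noise


alpha_bars = linear_alpha_bars(1000)
garment_latent = [0.3, -1.2, 0.8]  # hypothetical stand-in for a garment latent
xt, noise = q_sample(garment_latent, t=500, alpha_bars=alpha_bars, rng=random.Random(0))
```

At generation time the process runs in reverse: starting from pure noise, the trained denoiser, conditioned on the encoded sketch, removes noise step by step until a garment representation remains.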

Adjacent Research

TKFNet: Learning Texture Key Factor Driven Feature for Facial Expression Recognition

This paper introduces TKFNet, a framework that enhances facial expression recognition by focusing on localized texture regions—termed Texture Key Driver Factors (TKDF)—which are critical for distinguishing subtle emotional cues. The architecture combines a Texture-Aware Feature Extractor and Dual Contextual Information Filtering to capture fine-grained textures and contextual information, achieving state-of-the-art results on RAF-DB and KDEF datasets.

Large-Scale Gaussian Splatting SLAM

LSG-SLAM is a novel visual SLAM system that leverages 3D Gaussian Splatting with stereo cameras to perform robust, large-scale outdoor mapping. It introduces continuous submaps, feature-alignment warping constraints, and loop closure techniques to handle unbounded scenes efficiently, demonstrating superior performance over existing methods on the EuRoC and KITTI datasets.

Depth Anything with Any Prior

This paper introduces "Prior Depth Anything," a framework that fuses precise but sparse depth measurements with relative depth predictions to generate accurate, dense metric depth maps. Utilizing a coarse-to-fine pipeline, the method achieves strong zero-shot generalization across tasks like depth completion, super-resolution, and inpainting, outperforming task-specific models on seven real-world datasets.
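
The simplest way to combine a sparse metric prior with a relative depth map is a single global scale-and-shift least-squares fit, which also shows why relative depth alone is not metric: it is only correct up to an unknown scale and offset. A minimal sketch, assuming the relative prediction is depth rather than disparity and the image is flattened to a list; this is a classic baseline, not the paper's coarse-to-fine pipeline, and the function names and data are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def fit_scale_shift(relative: Sequence[float],
                    prior: Sequence[Optional[float]]) -> Tuple[float, float]:
    """Least-squares s, t minimizing sum (s * r_i + t - m_i)^2 over pixels with a prior."""
    pairs = [(r, m) for r, m in zip(relative, prior) if m is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two measured pixels")
    sum_r = sum(r for r, _ in pairs)
    sum_m = sum(m for _, m in pairs)
    sum_rr = sum(r * r for r, _ in pairs)
    sum_rm = sum(r * m for r, m in pairs)
    denom = n * sum_rr - sum_r * sum_r
    if denom == 0:
        raise ValueError("relative depth is constant at the measured pixels")
    s = (n * sum_rm - sum_r * sum_m) / denom
    t = (sum_m - s * sum_r) / n
    return s, t


def densify(relative: Sequence[float], prior: Sequence[Optional[float]]) -> List[float]:
    """Apply the fitted scale and shift to every pixel, giving dense metric depth."""
    s, t = fit_scale_shift(relative, prior)
    return [s * r + t for r in relative]


# Hypothetical flattened 4-pixel image; only pixels 0 and 2 have a metric measurement.
print(densify([0.0, 1.0, 2.0, 3.0], [1.0, None, 5.0, None]))  # [1.0, 3.0, 5.0, 7.0]
```

A single global fit cannot correct errors that vary across the image, which is the gap a coarse-to-fine refinement stage is meant to close.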

VRSplat: Fast and Robust Gaussian Splatting for Virtual Reality

VRSplat enhances 3D Gaussian Splatting for virtual reality by integrating techniques like Mini-Splatting, StopThePop, and Optimal Projection, along with a novel foveated rasterizer. This approach addresses VR-specific challenges such as temporal artifacts and projection distortions, achieving over 72 FPS and improved visual stability in head-mounted displays.
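
The 72 FPS figure translates into a hard per-frame budget of 1000 / 72 ≈ 13.9 ms, which is what foveation helps meet. A minimal sketch of generic gaze-based foveation, assuming rendering quality is chosen from a pixel's angular distance to the gaze direction; the 10° and 25° thresholds and all function names are hypothetical, and this is not VRSplat's actual rasterizer.

```python
import math
from typing import Sequence


def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at the given refresh rate."""
    return 1000.0 / fps


def eccentricity_deg(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Angle in degrees between the gaze direction and a pixel's view ray."""
    dot = sum(g * d for g, d in zip(gaze, direction))
    norm = math.sqrt(sum(g * g for g in gaze)) * math.sqrt(sum(d * d for d in direction))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def foveation_level(ecc_deg: float, inner_deg: float = 10.0, outer_deg: float = 25.0) -> int:
    """0 = full quality near the gaze point, 1 = reduced, 2 = coarsest periphery."""
    if ecc_deg <= inner_deg:
        return 0
    if ecc_deg <= outer_deg:
        return 1
    return 2


print(round(frame_budget_ms(72), 2))  # 13.89
gaze = (0.0, 0.0, 1.0)
print(foveation_level(eccentricity_deg(gaze, (0.0, 0.0, 1.0))))  # 0
print(foveation_level(eccentricity_deg(gaze, (1.0, 0.0, 0.0))))  # 2
```

Spending full effort only near the gaze point keeps the total cost per frame down, while the periphery, where visual acuity is lower, is rendered more cheaply.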

StoryReasoning Dataset: Using Chain-of-Thought for Scene Understanding and Grounded Story Generation

The StoryReasoning dataset comprises 4,178 stories derived from 52,016 movie images, designed to improve visual storytelling by maintaining character and object consistency across frames. It employs chain-of-thought reasoning and grounding techniques, enabling models like the fine-tuned Qwen Storyteller to reduce referential hallucinations and enhance narrative coherence.

![Uncaptioned image](https://substackcdn.com/image/fetch/$s_!NGZI!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F46d4c6fd-b043-4709-98bf-19bb8deff02b_1426x703.png)

![Uncaptioned image](https://substackcdn.com/image/fetch/$s_!UNCT!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F8aba1473-6bbd-40e5-9481-c4a3e1c1af0f_1660x374.png)