Today in Character Technology - 6.9.25

Relightable Gaussian Avatars, Low-Latency 2D Talking Heads, and an automated pipeline for generating diverse and photorealistic 4D human animations

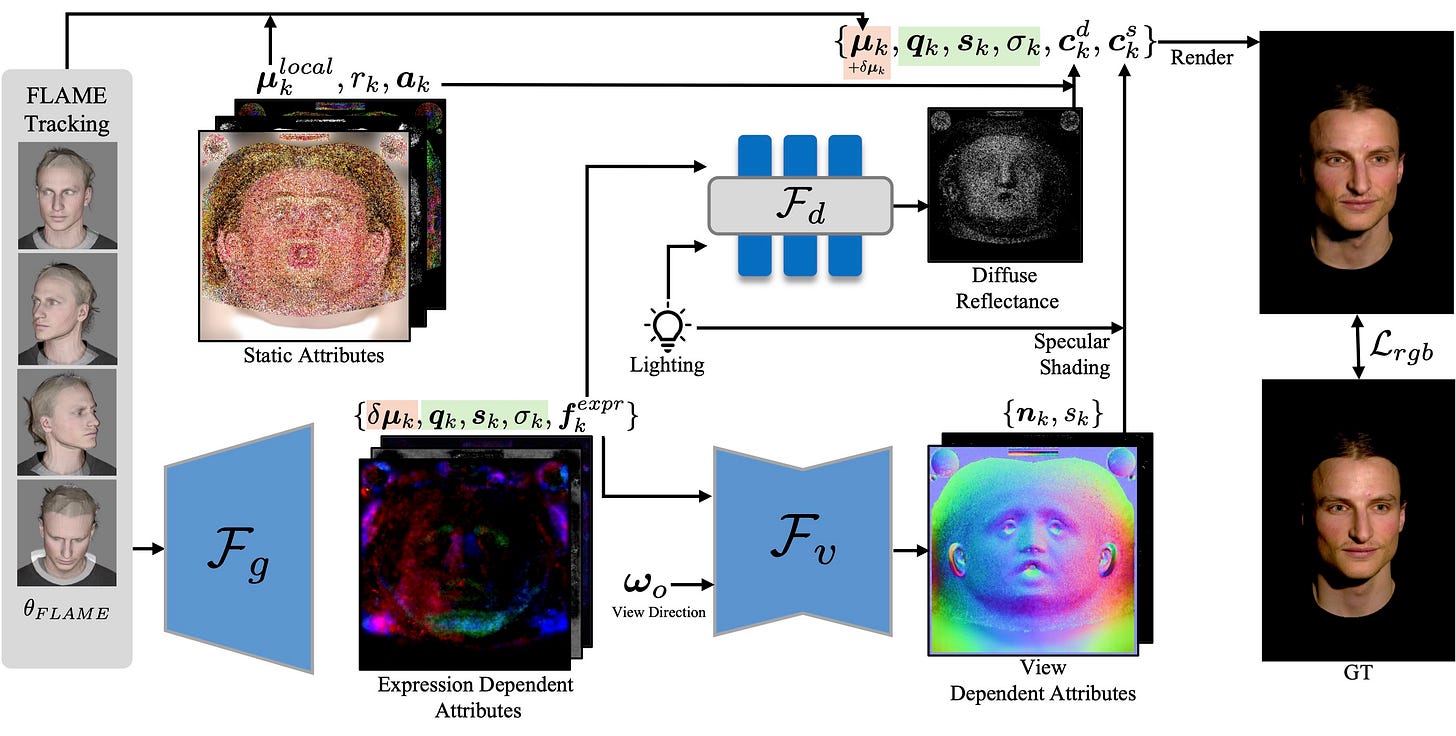

Today (or yesterday, technically, since I’m posting this late) we start off with a compelling new Gaussian Avatar paper, BecomingLit, which focuses on lower-cost data acquisition, a “hybrid neural shading” approach, and monocular video control. You’ll recall others are operating in this space as well, not least Meta Reality Labs’s Relightable Gaussian Codec Avatars from CVPR 2024, which still demonstrates arguably the highest-fidelity results.

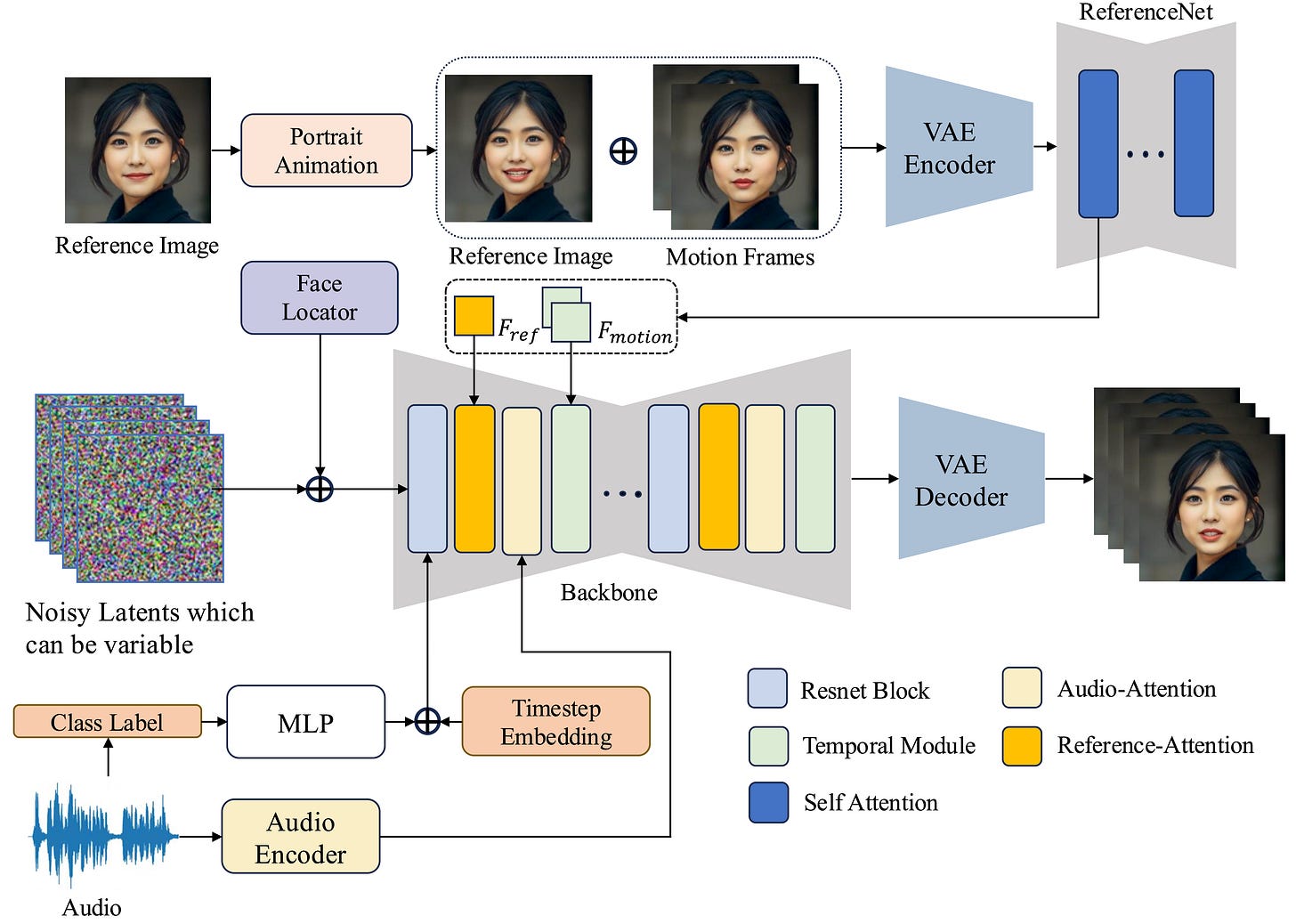

After that, LLIA (“Enabling Low-Latency Interactive Avatars”) may at first glance look like a step backwards in terms of 2D talking heads. However, you’ll note that one of its key contributions is the ability to run at 45 FPS at 512×512 on an RTX 4090D, part of the inevitable trend towards democratized real-time talking heads.

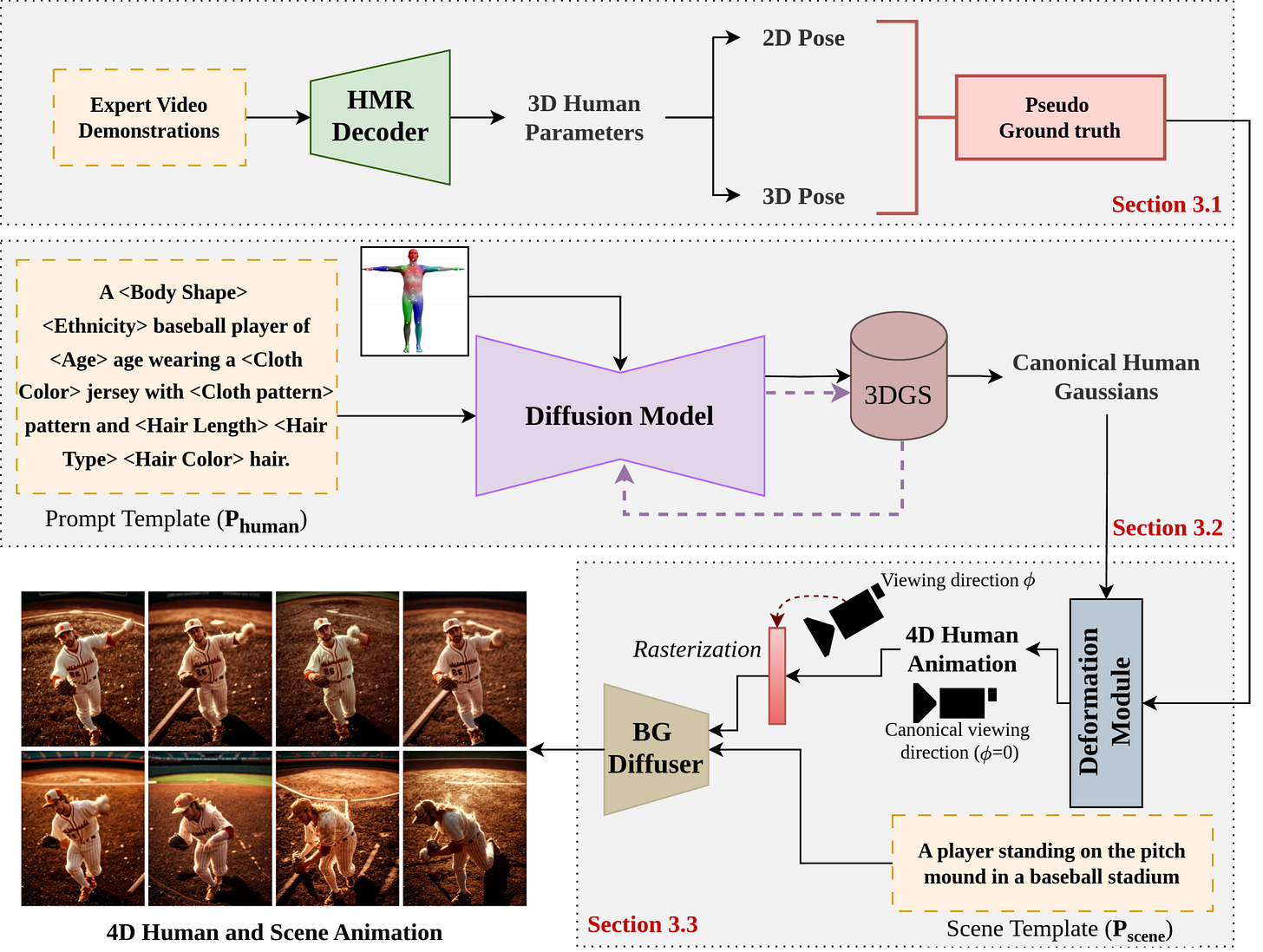

Gen4D introduces what its authors claim is an “automated pipeline for generating diverse and photorealistic 4D human animations”, but unfortunately the paper comes with no video or code to support the claim. ¯\_(ツ)_/¯

Enjoy!

BecomingLit: Relightable Gaussian Avatars with Hybrid Neural Shading

Jonathan Schmidt, Simon Giebenhain, Matthias Nießner

Technical University of Munich

🚧 Project: https://jonathsch.github.io/becominglit

📄 Paper: https://arxiv.org/pdf/2506.06271

💻 Code: https://github.com/jonathsch/becominglit (coming soon)

❌ ArXiv: https://arxiv.org/abs/2506.06271

We introduce BecomingLit, a novel method for reconstructing relightable, high-resolution head avatars that can be rendered from novel viewpoints at interactive rates. Therefore, we propose a new low-cost light stage capture setup, tailored specifically towards capturing faces. Using this setup, we collect a novel dataset consisting of diverse multi-view sequences of numerous subjects under varying illumination conditions and facial expressions. By leveraging our new dataset, we introduce a new relightable avatar representation based on 3D Gaussian primitives that we animate with a parametric head model and an expression-dependent dynamics module. We propose a new hybrid neural shading approach, combining a neural diffuse BRDF with an analytical specular term. Our method reconstructs disentangled materials from our dynamic light stage recordings and enables all-frequency relighting of our avatars with both point lights and environment maps. In addition, our avatars can easily be animated and controlled from monocular videos. We validate our approach in extensive experiments on our dataset, where we consistently outperform existing state-of-the-art methods in relighting and reenactment by a significant margin.
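For readers who want a feel for what "a diffuse term plus an analytical specular term" means in practice, here is a toy sketch. It is not the paper's model: the learned diffuse BRDF is replaced by a plain Lambertian placeholder (`toy_diffuse`), the specular lobe is Blinn-Phong (chosen only because it is short, the paper's actual specular term may differ), and every function name and default value below is hypothetical.

```python
import math

def normalize(v):
    """Return v scaled to unit length (returned unchanged if it has zero length)."""
    n = math.sqrt(sum(c * c for c in v))
    return v if n == 0.0 else tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def toy_diffuse(albedo, n_dot_l):
    # Placeholder for a learned diffuse term: plain Lambertian shading.
    # In a hybrid approach this is the part a network would predict.
    return tuple(a * n_dot_l for a in albedo)

def blinn_phong_specular(normal, light_dir, view_dir, shininess):
    # Analytical specular lobe: depends only on geometry and a shininess value.
    half = normalize(tuple(l + v for l, v in zip(light_dir, view_dir)))
    return max(dot(normal, half), 0.0) ** shininess

def shade(albedo, normal, light_dir, view_dir,
          spec_strength=0.04, shininess=32.0, diffuse_fn=toy_diffuse):
    """Combine a (stand-in) diffuse term with an analytical specular term."""
    normal, light_dir, view_dir = (normalize(v) for v in (normal, light_dir, view_dir))
    n_dot_l = max(dot(normal, light_dir), 0.0)
    diffuse = diffuse_fn(albedo, n_dot_l)
    # No highlight when the light is behind the surface.
    spec = 0.0
    if n_dot_l > 0.0:
        spec = spec_strength * blinn_phong_specular(normal, light_dir, view_dir, shininess)
    return tuple(d + spec for d in diffuse)

if __name__ == "__main__":
    # Light and camera both straight in front of the surface.
    print(shade((0.8, 0.6, 0.5), (0, 0, 1), (0, 0, 1), (0, 0, 1)))
```

The appeal of this kind of split, at least in the toy version, is that the sharp view-dependent highlight comes from a closed-form term while the softer, harder-to-model skin response is left to whatever `diffuse_fn` provides.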

LLIA — Enabling Low-Latency Interactive Avatars: Real-Time Audio-Driven Portrait Video Generation with Diffusion Models

Haojie Yu, Zhaonian Wang, Yihan Pan, Meng Cheng, Hao Yang, Chao Wang, Tao Xie, Xiaoming Xu, Xiaoming Wei, Xunliang Cai

Meituan Inc.

🚧 Project: https://meigen-ai.github.io/llia/

📄 Paper: https://arxiv.org/pdf/2506.05806

💻 Code: https://github.com/MeiGen-AI/llia

❌ ArXiv: https://arxiv.org/abs/2506.05806

Diffusion-based models have gained wide adoption in the virtual human generation due to their outstanding expressiveness. However, their substantial computational requirements have constrained their deployment in real-time interactive avatar applications, where stringent speed, latency, and duration requirements are paramount. We present a novel audio-driven portrait video generation framework based on the diffusion model to address these challenges. Firstly, we propose robust variable-length video generation to reduce the minimum time required to generate the initial video clip or state transitions, which significantly enhances the user experience. Secondly, we propose a consistency model training strategy for Audio-Image-to-Video to ensure real-time performance, enabling a fast few-step generation. Model quantization and pipeline parallelism are further employed to accelerate the inference speed. To mitigate the stability loss incurred by the diffusion process and model quantization, we introduce a new inference strategy tailored for long-duration video generation. These methods ensure real-time performance and low latency while maintaining high-fidelity output. Thirdly, we incorporate class labels as a conditional input to seamlessly switch between speaking, listening, and idle states. Lastly, we design a novel mechanism for fine-grained facial expression control to exploit our model's inherent capacity. Extensive experiments demonstrate that our approach achieves low-latency, fluid, and authentic two-way communication. On an NVIDIA RTX 4090D, our model achieves a maximum of 78 FPS at a resolution of 384×384 and 45 FPS at a resolution of 512×512, with an initial video generation latency of 140 ms and 215 ms, respectively.
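To put those throughput numbers in perspective, a quick back-of-the-envelope calculation converts the reported FPS into a per-frame time budget and expresses the initial latency in units of frame times. The figures come straight from the abstract; the function and dictionary names are just for illustration, and the "frame times" figure is a derived convenience number, not something the paper reports.

```python
def frame_budget_ms(fps):
    """Average time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

# Numbers quoted in the LLIA abstract (RTX 4090D).
reported = {
    "384x384": {"fps": 78, "initial_latency_ms": 140},
    "512x512": {"fps": 45, "initial_latency_ms": 215},
}

for resolution, m in reported.items():
    budget = frame_budget_ms(m["fps"])
    latency_in_frames = m["initial_latency_ms"] / budget
    print(f"{resolution}: {budget:.1f} ms per frame, "
          f"initial latency is about {latency_in_frames:.1f} frame times")
```

That works out to roughly 12.8 ms per frame at 384×384 and 22.2 ms per frame at 512×512.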

Gen4D: Synthesizing Humans and Scenes in the Wild

Jerrin Bright, Zhibo Wang, Yuhao Chen, Sirisha Rambhatla, John Zelek, David Clausi

Vision and Image Processing Lab

Critical ML Lab

University of Waterloo, Canada

🚧 Project: N/A

📄 Paper: https://arxiv.org/pdf/2506.05397

❌ ArXiv: https://arxiv.org/abs/2506.05397

Lack of input data for in‑the‑wild activities often results in low performance across various computer vision tasks. This challenge is particularly pronounced in uncommon human‑centric domains like sports, where real‑world data collection is complex and impractical. While synthetic datasets offer a promising alternative, existing approaches typically suffer from limited diversity in human appearance, motion, and scene composition due to their reliance on rigid asset libraries and hand‑crafted rendering pipelines. To address this, we introduce Gen4D, a fully automated pipeline for generating diverse and photorealistic 4D human animations. Together, Gen4D and SportPAL provide a scalable foundation for constructing synthetic datasets tailored to in‑the‑wild human‑centric vision tasks, with no need for manual 3D modeling or scene design.

![[Uncaptioned image] [Uncaptioned image]](https://substackcdn.com/image/fetch/$s_!Eryt!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fbae9e06d-8d14-4639-8182-3763bf7cc339_1661x1013.png)