Today in Character Technology - 5.14.25

Simulating Knots, Heterogeneous motion transfer, and more human gesture prediction.

In an unusual turn of events, there was only one paper released today that was particularly relevant to Character Tech - M3G out of The Hong Kong University of Science and Technology focuses on a new method for Co-Speech Gesture Synthesis.

They’ve not publicly shared any code or even supplementary video material, which is unfortunate for a paper focused on something that can really only be evaluated with video. On occasion, papers are submitted and later updated to include videos and project pages, so I’ll post an update if we get something more.

In the meantime, many researchers are learning, and announcing, that their papers have been accepted to SIGGRAPH 2025, so in honor of that, let’s have a look at two of them.

First up, some beautiful simulation work by the folks at Style3D Research, Peking University and University of Utah: Fast Physics-Based Modeling of Knots and Ties Using Templates.

Style3D, whose research is headed by the highly regarded academic and chief scientist Huamin Wang, has released a number of very impressive, high-fidelity cloth simulation products, including a realtime simulator for UE5. Congrats on their paper’s acceptance!

Then, for the Gen2D fans and observers, there’s “FlexiAct: Towards Flexible Action Control in Heterogeneous Scenarios” out of Tencent ARC Lab and Tsinghua University, which showcases a novel technique for transferring motion from one subject to another of a completely different type. Sadly, no one benchmarked it against Benedict Cumberbatch as Smaug - but the code is available, so you can try for yourself. :)

Enjoy!

Fast Physics-Based Modeling of Knots and Ties Using Templates

Dewen Guo, Zhendong Wang, Zegao Liu, Sheng Li, Guoping Wang, Yin Yang, Huamin Wang

Peking University, China

Style3D Research, China

University of Utah, USA

🚧 Project: https://wanghmin.github.io/publication/guo-2025-fpb/

📄 Paper: https://wanghmin.github.io/publication/guo-2025-fpb/Guo-2025-FPB.pdf

💻 Code: N/A

❌ ArXiv: N/A

Knots and ties are captivating elements of digital garments and accessories, but they have been notoriously challenging and computationally expensive to model manually. In this paper, we propose a physics-based modeling system for knots and ties using templates. The primary challenge lies in transforming cloth pieces into desired knot and tie configurations in a controllable, penetration-free manner, particularly when interacting with surrounding meshes. To address this, we introduce a pipe-like parametric knot template representation, defined by a Bézier curve as its medial axis and an adaptively adjustable radius for enhanced flexibility and variation. This representation enables customizable knot sizes, shapes, and styles while ensuring intersection-free results through robust collision detection techniques. Using the defined knot template, we present a mapping and penetration-free initialization method to transform selected cloth regions from UV space into the initial 3D knot shape. We further enable quasistatic simulation of knots and their surrounding meshes through a fast and reliable collision handling and simulation scheme. Our experiments demonstrate the system’s effectiveness and efficiency in modeling a wide range of digital knots and ties with diverse styles and shapes, including configurations that were previously impractical to create manually.
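To make the "pipe-like" template idea a little more concrete, here is a minimal sketch of a tube defined by a Bézier medial axis and a varying radius. It is not the authors' implementation: the function names, the linear radius profile, and the sampled inside/outside test are all assumptions made for illustration, and the paper's actual collision handling is far more robust than this.

```python
import math

def bezier_point(ctrl, t):
    """Evaluate a Bezier curve at t in [0, 1] using De Casteljau's algorithm."""
    pts = [tuple(p) for p in ctrl]
    while len(pts) > 1:
        # Repeatedly blend neighbouring control points until one remains.
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    return pts[0]

def radius_at(t, r_start, r_end):
    """Hypothetical radius profile: a linear blend between two end radii."""
    return (1 - t) * r_start + t * r_end

def sample_template(ctrl, r_start, r_end, n=5):
    """Return n (center, radius) samples along the medial axis (n >= 2)."""
    samples = []
    for i in range(n):
        t = i / (n - 1)
        samples.append((bezier_point(ctrl, t), radius_at(t, r_start, r_end)))
    return samples

def inside_pipe(p, samples):
    """Approximate test: p counts as inside if it lies within the radius
    of any sampled center (a union of spheres, not an exact tube)."""
    return any(math.dist(p, c) <= r for c, r in samples)

# Illustrative control points for a single arched segment (made-up values).
ctrl = [(0, 0, 0), (1, 2, 0), (3, 2, 0), (4, 0, 0)]
samples = sample_template(ctrl, r_start=0.2, r_end=0.4, n=5)
print(inside_pipe((2.0, 1.6, 0.0), samples))
```

Moving the control points reshapes the knot's path and changing the radii thickens or thins the tube, which is roughly the kind of size and style control the abstract describes.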

FlexiAct: Towards Flexible Action Control in Heterogeneous Scenarios

Shiyi Zhang, Junhao Zhuang, Zhaoyang Zhang, Ying Shan, Yansong Tang

Tencent ARC Lab, China

Tsinghua Shenzhen International Graduate School, Tsinghua University, China

🚧 Project: https://shiyi-zh0408.github.io/projectpages/FlexiAct/

📄 Paper: https://arxiv.org/pdf/2505.03730

💻 Code: https://github.com/shiyi-zh0408/FlexiAct

❌ ArXiv: https://arxiv.org/abs/2505.03730

Action customization involves generating videos where the subject performs actions dictated by input control signals. Current methods use pose-guided or global motion customization but are limited by strict constraints on spatial structure, such as layout, skeleton, and viewpoint consistency, reducing adaptability across diverse subjects and scenarios. To overcome these limitations, we propose FlexiAct, which transfers actions from a reference video to an arbitrary target image. Unlike existing methods, FlexiAct allows for variations in layout, viewpoint, and skeletal structure between the subject of the reference video and the target image, while maintaining identity consistency. Achieving this requires precise action control, spatial structure adaptation, and consistency preservation. To this end, we introduce RefAdapter, a lightweight image-conditioned adapter that excels in spatial adaptation and consistency preservation, surpassing existing methods in balancing appearance consistency and structural flexibility. Additionally, based on our observations, the denoising process exhibits varying levels of attention to motion (low frequency) and appearance details (high frequency) at different timesteps. So we propose FAE (Frequency-aware Action Extraction), which, unlike existing methods that rely on separate spatial-temporal architectures, directly achieves action extraction during the denoising process. Experiments demonstrate that our method effectively transfers actions to subjects with diverse layouts, skeletons, and viewpoints.

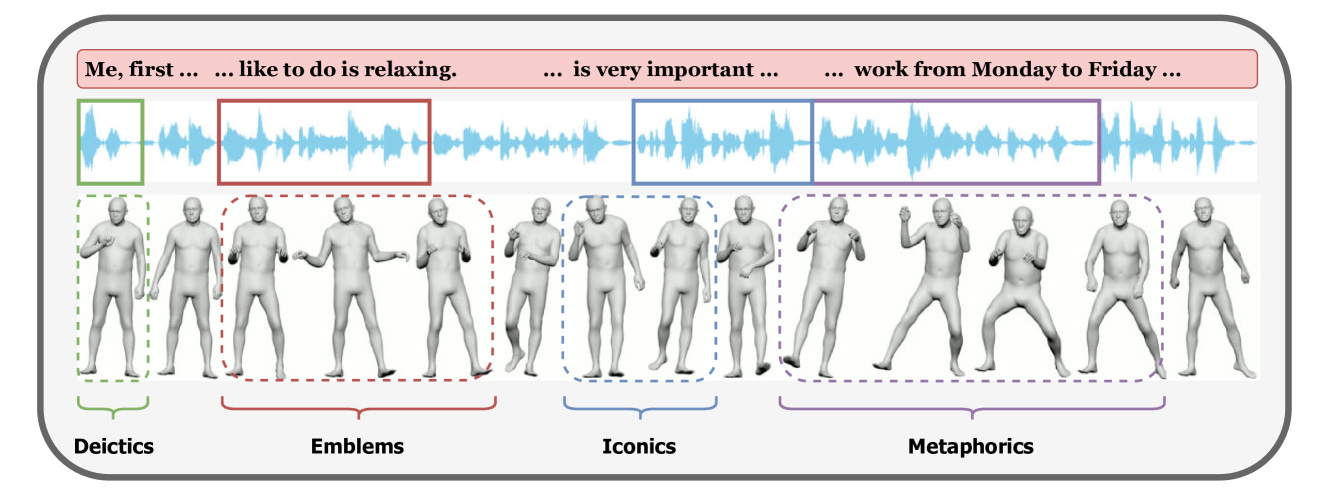

M3G: Multi-Granular Gesture Generator for Audio-Driven Full-Body Human Motion Synthesis

Zhizhuo Yin, Yuk Hang Tsui, Pan Hui

The Hong Kong University of Science and Technology

🚧 Project: N/A

📄 Paper: https://arxiv.org/pdf/2505.08293

💻 Code: N/A

❌ ArXiv: https://arxiv.org/abs/2505.08293

Generating full-body human gestures encompassing face, body, hands, and global movements from audio is a valuable yet challenging task in virtual avatar creation. Previous systems focused on tokenizing the human gestures framewisely and predicting the tokens of each frame from the input audio. However, one observation is that the number of frames required for a complete expressive human gesture, defined as granularity, varies among different human gesture patterns. Existing systems fail to model these gesture patterns due to the fixed granularity of their gesture tokens. To solve this problem, we propose a novel framework named Multi-Granular Gesture Generator (M3G) for audio-driven holistic gesture generation. In M3G, we propose a novel Multi-Granular VQ-VAE (MGVQ-VAE) to tokenize motion patterns and reconstruct motion sequences from different temporal granularities. Subsequently, we proposed a multi-granular token predictor that extracts multi-granular information from audio and predicts the corresponding motion tokens. Then M3G reconstructs the human gestures from the predicted tokens using the MGVQ-VAE. Both objective and subjective experiments demonstrate that our proposed M3G framework outperforms the state-of-the-art methods in terms of generating natural and expressive full-body human gestures.
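As a rough illustration of what tokenizing motion at several temporal granularities can look like, here is a toy sketch. It is not the MGVQ-VAE from the paper: a real VQ-VAE learns its encoder, decoder, and codebooks, whereas this example assumes hand-written codebooks and uses plain window averaging as a stand-in encoder. All names and values are hypothetical.

```python
def nearest_code(vec, codebook):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, codebook[i])))

def pool_windows(frames, granularity):
    """Average consecutive, non-overlapping windows of `granularity` frames.
    Trailing frames that do not fill a whole window are dropped."""
    pooled = []
    for start in range(0, len(frames) - granularity + 1, granularity):
        window = frames[start:start + granularity]
        pooled.append([sum(col) / granularity for col in zip(*window)])
    return pooled

def tokenize(frames, codebooks):
    """Return {granularity: token list}; codebooks maps granularity -> codebook."""
    return {g: [nearest_code(v, cb) for v in pool_windows(frames, g)]
            for g, cb in codebooks.items()}

# Four frames of a 1-D "pose" feature and two made-up codebooks.
frames = [[0.0], [0.1], [0.9], [1.0]]
codebooks = {
    1: [[0.0], [1.0]],         # per-frame tokens
    2: [[0.0], [0.5], [1.0]],  # tokens covering two frames each
}
print(tokenize(frames, codebooks))
```

The same sequence yields a different number of tokens at each granularity, which is the property the abstract argues a single fixed-granularity tokenizer lacks.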

Adjacent Research

ADC-GS: Anchor-Driven Deformable and Compressed Gaussian Splatting for Dynamic Scene Reconstruction

Introduces ADC-GS, a method that organizes Gaussian primitives into anchor-based structures for dynamic scene reconstruction, achieving faster rendering and better compression than per-Gaussian deformation approaches.

HoloTime: Taming Video Diffusion Models for Panoramic 4D Scene Generation

Presents HoloTime, a framework that converts panoramic images into immersive 4D scenes using a two-stage diffusion model and a novel 360World dataset, enhancing VR/AR experiences.

DLO-Splatting: Tracking Deformable Linear Objects Using 3D Gaussian Splatting

Proposes DLO-Splatting, an algorithm that tracks deformable linear objects by combining position-based dynamics with 3D Gaussian splatting, improving accuracy in complex scenarios like knot tying.

SPAST: Arbitrary Style Transfer with Style Priors via Pre-trained Large-scale Model

Introduces SPAST, a style transfer method that leverages style priors from large-scale models and a novel stylization module to produce high-quality stylized images efficiently.

A Survey of 3D Reconstruction with Event Cameras: From Event-based Geometry to Neural 3D Rendering

Provides a comprehensive review of 3D reconstruction techniques using event cameras, covering methods from geometric approaches to neural rendering, and highlighting future research directions.