Today in Character Technology - 5.12.25

State-of-the-art detail using Texture-Space Gaussian Avatars. Then, when it rains, it pours: 3D hair reconstruction from a single image. Also, a new dataset focused on rigging.

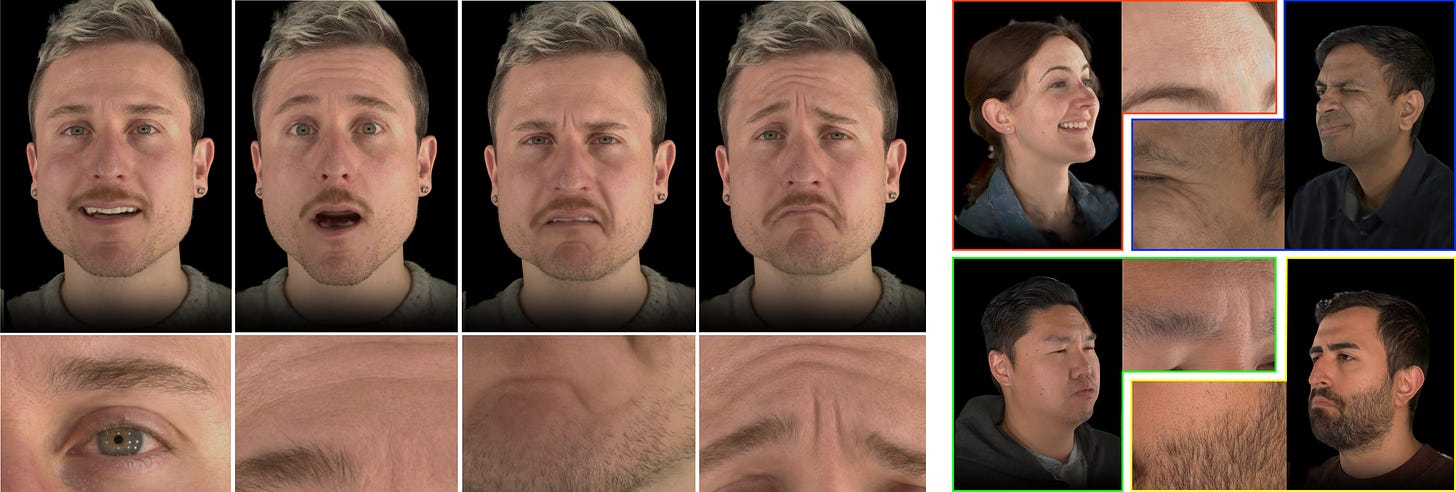

Today Google & ETH Zurich bring us another leap in Gaussian avatars. In last Wednesday’s update, I spoke of my fondness for the last 10%, and of how many subdomains of emerging Character Technology are now maturing enough that the focus is turning toward the last degree of realism that will make them indistinguishable from reality. Google/ETH’s TeGA brings us a step closer by targeting a much higher level of detail than we’ve seen before. The crisp details and sharp specular highlights make this a standout paper.

Then, when it rains, it pours, I suppose. Following on the heels of Friday’s “GeomHair”, which focuses on accurate hair reconstruction from 3D scans, we’re hit with DiffLocks, another Max Planck Institute collaboration, this time focused on recreating hair from a single image. It builds on a lot of their previous work, like 2024’s HAAR: Text-Conditioned Generative Model of 3D Strand-based Human Hairstyles. While there’s a clear qualitative difference between the two, with GeomHair delivering far more accurate strands, it’s exciting to see efforts on both fronts, and I suspect they will ultimately converge on much higher-quality monocular reconstruction.

And on that note, an update on GeomHair from Friday: they’ve added a new project page and say that code is coming soon. Check it out.

Finally, something we don’t see too often: Anymate, a rigging dataset aimed at “automatic rigging” of any shape. (Technically, they really mean bones and skinning, not “rigging” in the sense a tech artist would think of it. Still interesting.)

Enjoy

TeGA: Texture Space Gaussian Avatars for High-Resolution Dynamic Head Modeling

Gengyan Li, Paulo Gotardo, Timo Bolkart, Stephan Garbin, Kripasindhu Sarkar, Abhimitra Meka, Alexandros Lattas, Thabo Beeler

ETH Zurich

Google

🚧 Project: syntec-research.github.io

📄 Paper: https://arxiv.org/pdf/2505.05672

💻 Code: N/A

❌ ArXiv: https://arxiv.org/abs/2505.05672

Sparse volumetric reconstruction and rendering via 3D Gaussian splatting have recently enabled animatable 3D head avatars that are rendered under arbitrary viewpoints with impressive photorealism. Today, such photoreal avatars are seen as a key component in emerging applications in telepresence, extended reality, and entertainment. Building a photoreal avatar requires estimating the complex non-rigid motion of different facial components as seen in input video images; due to inaccurate motion estimation, animatable models typically present a loss of fidelity and detail when compared to their non-animatable counterparts, built from an individual facial expression. Also, recent state-of-the-art models are often affected by memory limitations that reduce the number of 3D Gaussians used for modeling, leading to lower detail and quality. To address these problems, we present a new high-detail 3D head avatar model that improves upon the state of the art, largely increasing the number of 3D Gaussians and modeling quality for rendering at 4K resolution. Our high-quality model is reconstructed from multiview input video and builds on top of a mesh-based 3D morphable model, which provides a coarse deformation layer for the head. Photoreal appearance is modelled by 3D Gaussians embedded within the continuous UVD tangent space of this mesh, allowing for more effective densification where most needed. Additionally, these Gaussians are warped by a novel UVD deformation field to capture subtle, localized motion. Our key contribution is the novel deformable Gaussian encoding and overall fitting procedure that allows our head model to preserve appearance detail, while capturing facial motion and other transient high-frequency features such as skin wrinkling.
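The key idea in the abstract is that each Gaussian lives in the UVD tangent space of the morphable-model mesh rather than in world space. As a rough illustration (not TeGA's implementation), the sketch below maps a (u, v, d) coordinate to 3D by locating (u, v) inside a UV triangle, interpolating the surface position and normal there with barycentric weights, and offsetting by d along the normal. It assumes per-vertex normals and that the containing triangle (`face`) is already known; the function names are hypothetical.

```python
import numpy as np

def barycentric_in_uv(uv_tri, uv):
    """Barycentric coordinates of a 2D point uv w.r.t. a UV triangle uv_tri (3 x 2)."""
    a, b, c = uv_tri
    v0, v1, v2 = b - a, c - a, uv - a
    d00, d01, d11 = v0 @ v0, v0 @ v1, v1 @ v1
    d20, d21 = v2 @ v0, v2 @ v1
    denom = d00 * d11 - d01 * d01
    w1 = (d11 * d20 - d01 * d21) / denom
    w2 = (d00 * d21 - d01 * d20) / denom
    return np.array([1.0 - w1 - w2, w1, w2])

def uvd_to_world(verts, vert_normals, uvs, face, uvd):
    """Map a (u, v, d) coordinate to 3D: interpolate surface position and normal
    at (u, v) on the given triangle, then offset by d along the unit normal."""
    bary = barycentric_in_uv(uvs[face], uvd[:2])
    p = bary @ verts[face]
    n = bary @ vert_normals[face]
    n = n / np.linalg.norm(n)
    return p + uvd[2] * n

# Usage: one triangle in the z = 0 plane whose UVs equal its xy coordinates.
verts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
normals = np.tile([0.0, 0.0, 1.0], (3, 1))
uvs = verts[:, :2].copy()
face = np.array([0, 1, 2])
g = np.array([0.25, 0.25, 0.1])  # a Gaussian center in UVD coordinates
print(uvd_to_world(verts, normals, uvs, face, g))                # expected (0.25, 0.25, 0.1)
print(uvd_to_world(verts + [0, 0, 1.0], normals, uvs, face, g))  # expected (0.25, 0.25, 1.1)
```

Because world positions are recomputed from the mesh, moving the vertices carries the Gaussian along for free. TeGA's UVD deformation field would additionally perturb (u, v, d) to capture local motion, which this sketch does not model.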

DiffLocks: Generating 3D Hair from a Single Image using Diffusion Models

Radu Alexandru Rosu, Keyu Wu, Yao Feng, Youyi Zheng, Michael J. Black

Max Planck Institute for Intelligent Systems

Meshcapade

Zhejiang University

Stanford University

🚧 Project: radualexandru.github.io

📄 Paper: https://arxiv.org/pdf/2505.06166

💻 Code: radualexandru.github.io

❌ ArXiv: https://arxiv.org/abs/2505.06166

We address the task of generating 3D hair geometry from a single image, which is challenging due to the diversity of hairstyles and the lack of paired image-to-3D hair data. Previous methods are primarily trained on synthetic data and cope with the limited amount of such data by using low-dimensional intermediate representations, such as guide strands and scalp-level embeddings, that require post-processing to decode, upsample, and add realism. These approaches fail to reconstruct detailed hair, struggle with curly hair, or are limited to handling only a few hairstyles. To overcome these limitations, we propose DiffLocks, a novel framework that enables detailed reconstruction of a wide variety of hairstyles directly from a single image. First, we address the lack of 3D hair data by automating the creation of the largest synthetic hair dataset to date, containing 40K hairstyles. Second, we leverage the synthetic hair dataset to learn an image-conditioned diffusion-transformer model that generates accurate 3D strands from a single frontal image. By using a pretrained image backbone, our method generalizes to in-the-wild images despite being trained only on synthetic data. Our diffusion model predicts a scalp texture map in which any point in the map contains the latent code for an individual hair strand. These codes are directly decoded to 3D strands without post-processing techniques. Representing individual strands, instead of guide strands, enables the transformer to model the detailed spatial structure of complex hairstyles. With this, DiffLocks can recover highly curled hair, like afro hairstyles, from a single image for the first time.
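To make the “scalp texture map” concrete: the diffusion model outputs an image-like grid in which each texel holds a per-strand latent code, so producing hair reduces to sampling that grid at root positions and decoding each code into a polyline. The sketch below shows only that data flow. The map size (64×64×16), strand length (32 points), bilinear lookup, and the random linear `decoder_weights` are placeholder assumptions standing in for DiffLocks' learned components, which are not reproduced here.

```python
import numpy as np

def bilinear_sample(tex, u, v):
    """Bilinearly sample an (H, W, C) texture at continuous coordinates u, v in [0, 1]."""
    h, w, _ = tex.shape
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * tex[y0, x0] + fx * tex[y0, x1]
    bottom = (1 - fx) * tex[y1, x0] + fx * tex[y1, x1]
    return (1 - fy) * top + fy * bottom

def decode_strand(latent, decoder_weights, root, n_points):
    """Hypothetical stand-in decoder: a linear map from a (C,) latent code to
    n_points 3D offsets, added to the strand's root position on the scalp."""
    offsets = (latent @ decoder_weights).reshape(n_points, 3)
    return root + offsets

# Usage with random placeholder data (not a trained model).
rng = np.random.default_rng(0)
latent_map = rng.normal(size=(64, 64, 16))              # H x W x C scalp map
decoder_weights = 0.01 * rng.normal(size=(16, 32 * 3))  # C -> 32 points x 3
root = np.array([0.0, 0.0, 0.1])                        # root position on the scalp
strand = decode_strand(bilinear_sample(latent_map, 0.5, 0.3), decoder_weights, root, 32)
print(strand.shape)  # (32, 3)
```

A full pipeline would sample many root positions across the scalp and decode each one. Since every strand gets its own code, there is no guide-strand upsampling step, which is the point the abstract makes.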

Anymate: A Dataset and Baselines for Learning 3D Object Rigging

Deng, Y., Zhang, Y., Geng, C., Wu, S., Wu, J.

Stanford University, USA

University of Cambridge, UK

🚧 Project: anymate3d.github.io

📄 Paper: https://arxiv.org/pdf/2505.06227

💻 Code: anymate3d.github.io

❌ ArXiv: https://arxiv.org/abs/2505.06227

Rigging and skinning are essential steps to create realistic 3D animations, often requiring significant expertise and manual effort. Traditional attempts at automating these processes rely heavily on geometric heuristics and often struggle with objects of complex geometry. Recent data-driven approaches show potential for better generality, but are often constrained by limited training data. We present the Anymate Dataset, a large-scale dataset of 230K 3D assets paired with expert-crafted rigging and skinning information—70 times larger than existing datasets. Using this dataset, we propose a learning-based auto-rigging framework with three sequential modules for joint, connectivity, and skinning weight prediction. We systematically design and experiment with various architectures as baselines for each module and conduct comprehensive evaluations on our dataset to compare their performance. Our models significantly outperform existing methods, providing a foundation for comparing future methods in automated rigging and skinning.
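The three modules in the abstract predict joints, their connectivity, and per-vertex skinning weights. As a refresher on what those weights ultimately drive (the “bones and skinning” part of the picture), here is standard linear blend skinning: each posed vertex is a weighted sum of the rest-pose vertex transformed by every joint, v′ᵢ = Σⱼ wᵢⱼ Tⱼ vᵢ. This is the textbook formulation, not Anymate's code; `joint_transforms` is assumed to already include each joint's inverse bind matrix.

```python
import numpy as np

def linear_blend_skinning(rest_verts, weights, joint_transforms):
    """Deform vertices with linear blend skinning.

    rest_verts:       (V, 3) rest-pose positions
    weights:          (V, J) skinning weights, each row summing to 1
    joint_transforms: (J, 4, 4) rest-to-posed transforms (inverse bind already applied)
    """
    homo = np.hstack([rest_verts, np.ones((rest_verts.shape[0], 1))])  # (V, 4)
    # Transform every vertex by every joint: (V, J, 4).
    per_joint = np.einsum('jab,vb->vja', joint_transforms, homo)
    # Blend the per-joint results with the skinning weights: (V, 4).
    blended = np.einsum('vj,vja->va', weights, per_joint)
    return blended[:, :3]

# Usage: joint 0 stays put, joint 1 translates by +1 in y.
lift = np.eye(4)
lift[1, 3] = 1.0
transforms = np.stack([np.eye(4), lift])
verts = np.array([[1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
weights = np.array([[1.0, 0.0], [0.5, 0.5]])
print(linear_blend_skinning(verts, weights, transforms))
# first vertex unchanged at (1, 0, 0); second moves halfway, to (2, 0.5, 0)
```

Predicting good weights is the hard part the dataset targets; once they exist, deformation is this cheap weighted sum.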

Adjacent Research

Steepest Descent Density Control for Compact 3D Gaussian Splatting

Introduces SteepGS, an optimization-theoretic framework that enhances 3D Gaussian Splatting by minimizing redundancy and memory usage through principled density control, achieving a ~50% reduction in Gaussian points without compromising rendering quality.
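To put the ~50% figure in concrete terms, here is back-of-the-envelope arithmetic using the per-Gaussian parameter layout of the original 3D Gaussian Splatting: 3 floats for position, 3 for scale, 4 for a rotation quaternion, 1 for opacity, and 48 for degree-3 spherical-harmonic color (16 coefficients × 3 channels), all float32. The 1M-Gaussian scene is a hypothetical example, and the estimate ignores optimizer state and compression, so treat it as an order-of-magnitude figure rather than SteepGS's reported numbers.

```python
# Parameters per Gaussian in the original 3DGS layout, stored as float32.
FLOATS_PER_GAUSSIAN = 3 + 3 + 4 + 1 + 48  # position, scale, quaternion, opacity, SH color
BYTES_PER_FLOAT = 4

def splat_memory_mb(num_gaussians):
    """Raw parameter memory in megabytes (1 MB = 1e6 bytes), excluding optimizer state."""
    return num_gaussians * FLOATS_PER_GAUSSIAN * BYTES_PER_FLOAT / 1e6

baseline = 1_000_000  # hypothetical scene size
print(splat_memory_mb(baseline))       # 236.0
print(splat_memory_mb(baseline // 2))  # 118.0 after a ~50% reduction
```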

QuickSplat: Fast 3D Surface Reconstruction via Learned Gaussian Initialization

Presents QuickSplat, a method that accelerates 3D surface reconstruction by learning data-driven priors for Gaussian initialization, resulting in an 8× speedup and up to 48% reduction in depth errors compared to existing techniques.
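For context on what a learned initialization replaces: the original 3D Gaussian Splatting pipeline starts from a sparse point cloud and gives each point an isotropic Gaussian whose size comes from the distances to its three nearest neighbors. The sketch below shows that hand-crafted heuristic with a brute-force neighbor search; combining the three distances as a root-mean-square is our choice for illustration. QuickSplat's learned priors are not reproduced here.

```python
import numpy as np

def knn_isotropic_scales(points, k=3):
    """Initial isotropic scale per point: RMS distance to its k nearest neighbors.
    Brute force O(N^2), fine for a sketch but not for real point clouds."""
    diff = points[:, None, :] - points[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)       # (N, N) squared distances
    np.fill_diagonal(d2, np.inf)          # ignore each point's distance to itself
    nearest = np.sort(d2, axis=1)[:, :k]  # k smallest squared distances per point
    return np.sqrt(nearest.mean(axis=1))

pts = np.array([[0.0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]])
print(knn_isotropic_scales(pts))  # first entry is 1.0: the origin is 1 unit from all three neighbors
```

A heuristic like this knows nothing about the underlying surface, which is the gap a data-driven initialization aims to close.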

Image Segmentation via Variational Model Based Tailored UNet: A Deep Variational Framework

Proposes VM_TUNet, a hybrid framework combining the interpretability of variational models with the feature learning of UNet, achieving superior segmentation performance, particularly in preserving fine boundaries.